Securing Key Details

Security is a critical concern in any technology stack, and the cloud is no exception. Azure Key Vault is a service for securely storing sensitive information such as server details, usernames, and passwords. Our customer initially hard-coded these sensitive details directly in their Azure Data Factory (ADF) linked services. XTIVIA recommended moving them into Azure Key Vault and configuring the ADF linked services to reference the stored secrets instead.

For more information on Azure Key Vault, refer to the link below.

https://learn.microsoft.com/en-us/azure/key-vault/general/basic-concepts

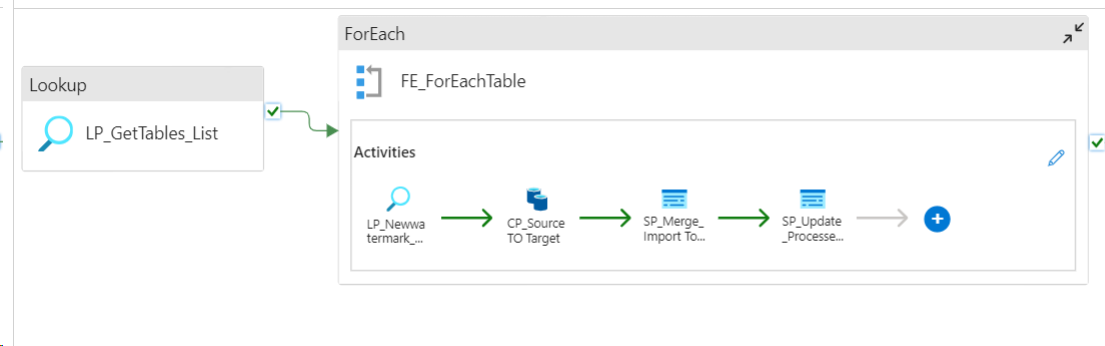

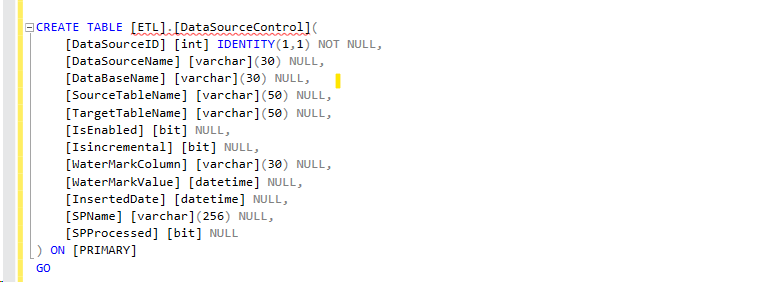
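As a rough illustration of what a linked service looks like after this change, the Python sketch below builds the JSON definition of an Azure SQL Database linked service whose connection string is an `AzureKeyVaultSecret` reference rather than a hard-coded value. The linked service names (`AzureKeyVaultLS`, `AzureSqlSourceLS`) and the secret name (`sql-connection-string`) are hypothetical placeholders. The definition is normally authored in ADF Studio or deployed as JSON; Python is used here only to show its shape.

```python
import json

# Hypothetical names: substitute your own Key Vault linked service and secret.
KEY_VAULT_LINKED_SERVICE = "AzureKeyVaultLS"
SECRET_NAME = "sql-connection-string"


def key_vault_secret(secret_name: str, vault_linked_service: str) -> dict:
    """Return an ADF reference to a secret stored in Azure Key Vault."""
    return {
        "type": "AzureKeyVaultSecret",
        "store": {
            "referenceName": vault_linked_service,
            "type": "LinkedServiceReference",
        },
        "secretName": secret_name,
    }


def sql_linked_service(name: str) -> dict:
    """Build an Azure SQL Database linked service definition whose connection
    string is fetched from Key Vault at runtime instead of being hard-coded."""
    return {
        "name": name,
        "properties": {
            "type": "AzureSqlDatabase",
            "typeProperties": {
                "connectionString": key_vault_secret(
                    SECRET_NAME, KEY_VAULT_LINKED_SERVICE
                ),
            },
        },
    }


if __name__ == "__main__":
    # Print the JSON that would be deployed to the data factory.
    print(json.dumps(sql_linked_service("AzureSqlSourceLS"), indent=2))
```

Because the linked service holds only a pointer to the secret, rotating the password means updating the secret in Key Vault, with no change to the data factory itself.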

One Pipeline for Multiple Objects

Our client’s legacy solution used one pipeline per object, meaning N pipelines for N objects. This led to heavy resource usage and, in turn, high billing. XTIVIA’s recommended solution was to build a metadata-driven model in which all table information (such as table name, full or incremental load, and watermark value) is stored in a control table. With this approach, a single pipeline iterates through all the tables, pulls the data from the source, and loads it into the destination. This drastically reduces the workload and resource consumption. The following screenshot illustrates the control-table approach for loading multiple tables through a single pipeline.
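A minimal sketch of the metadata-driven pattern in Python, with an in-memory dictionary standing in for the source system and a list of `ControlRow` objects standing in for the control table. All table names, column names, and values are hypothetical. In ADF itself this loop would typically be a Lookup activity that reads the control table, feeding a ForEach activity that runs a Copy activity per table; the code only simulates that behavior.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical in-memory source standing in for real source tables.
# ISO-8601 date strings compare correctly as plain strings.
SOURCE = {
    "Customers": [
        {"id": 1, "modified": "2024-01-05"},
        {"id": 2, "modified": "2024-02-10"},
    ],
    "Orders": [
        {"id": 10, "modified": "2024-01-20"},
        {"id": 11, "modified": "2024-03-01"},
    ],
}


@dataclass
class ControlRow:
    """One row of a hypothetical control table."""
    table_name: str
    load_type: str                         # "full" or "incremental"
    watermark_value: Optional[str] = None  # last "modified" value loaded


def extract(row: ControlRow) -> list:
    """Pull rows for one table according to its control-table settings."""
    rows = SOURCE[row.table_name]
    if row.load_type == "full":
        return list(rows)
    if row.load_type == "incremental":
        # An empty watermark sorts before any date, so the first run loads everything.
        watermark = row.watermark_value or ""
        return [r for r in rows if r["modified"] > watermark]
    raise ValueError(f"Unknown load type: {row.load_type}")


def run_pipeline(control_table: list, destination: dict) -> None:
    """One generic pipeline that iterates over every control-table row."""
    for row in control_table:
        batch = extract(row)
        if row.load_type == "full":
            destination[row.table_name] = batch  # full refresh replaces the target
        else:
            destination.setdefault(row.table_name, []).extend(batch)  # append new rows
            if batch:
                # Advance the watermark so the next run skips rows already loaded.
                row.watermark_value = max(r["modified"] for r in batch)


if __name__ == "__main__":
    control = [
        ControlRow("Customers", "full"),
        ControlRow("Orders", "incremental", "2024-02-01"),
    ]
    target: dict = {}
    run_pipeline(control, target)
    print(target)
    print(control[1].watermark_value)  # 2024-03-01
```

Running `run_pipeline` a second time with the same control table reloads Customers in full but copies no new Orders rows, because the watermark has already advanced to `2024-03-01`. Adding a table becomes a new control-table row rather than a new pipeline.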

Reusable Code

Reusing code where applicable is a standard efficiency practice. Our customer had to run the same query on more than 100 databases, and creating a separate linked service for each database would have been tedious and costly. XTIVIA’s solution was to use a dynamic (parameterized) linked service in Azure Data Factory: the database name is passed as a parameter on each iteration, so a single pipeline and a single linked service run the same query against every database. The key point is that, where applicable, reusing code is effective, efficient, and cost-effective.
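The parameterized linked service can be sketched as follows. The linked service declares a `DBName` parameter and references it in the connection string with the `@{linkedService().DBName}` expression; the Python code then mimics how ADF substitutes a different value on each ForEach iteration. The server name, database names, and query are hypothetical, and the plain string replacement is only a simplified stand-in for ADF’s own expression evaluation.

```python
# Hypothetical linked service definition: one Azure SQL linked service with a
# DBName parameter, referenced in the connection string via an ADF expression.
LINKED_SERVICE = {
    "name": "AzureSqlDynamicLS",
    "properties": {
        "type": "AzureSqlDatabase",
        "parameters": {"DBName": {"type": "String"}},
        "typeProperties": {
            "connectionString": (
                "Server=tcp:myserver.database.windows.net,1433;"
                "Database=@{linkedService().DBName};"
            ),
        },
    },
}

PLACEHOLDER = "@{linkedService().DBName}"


def resolve_connection_string(linked_service: dict, db_name: str) -> str:
    """Simplified stand-in for ADF's runtime substitution of the parameter."""
    template = linked_service["properties"]["typeProperties"]["connectionString"]
    return template.replace(PLACEHOLDER, db_name)


def plan_runs(databases: list, query: str) -> list:
    """Simulate a ForEach: same linked service, same query, one database per iteration."""
    return [
        (db, resolve_connection_string(LINKED_SERVICE, db), query)
        for db in databases
    ]


if __name__ == "__main__":
    for db, conn, sql in plan_runs(["SalesDB", "HRDB"], "SELECT COUNT(*) FROM dbo.Orders"):
        print(db, "->", conn)
```

Onboarding another database then only means adding its name to the list the ForEach iterates over, typically read from a control table or pipeline parameter.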

Efficient Alert and Audit Mechanism

An audit mechanism is a significant consideration in ETL development. It helps developers understand how efficiently data is being migrated: it tracks ETL job performance, captures execution failures, and logs error information.
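A minimal audit wrapper, sketched in Python under the assumption that each ETL job is a callable returning the number of rows it copied. The `AuditRecord` fields are illustrative; in practice these values would typically be written to an audit table (for example, from ADF activity outputs such as the Copy activity’s `rowsCopied`) rather than to an in-memory list.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class AuditRecord:
    """One entry in a hypothetical audit log; field names are illustrative."""
    job_name: str
    status: str = "Running"
    rows_copied: int = 0
    duration_s: float = 0.0
    error_message: Optional[str] = None


def run_with_audit(job_name: str, job: Callable[[], int],
                   audit_log: List[AuditRecord]) -> AuditRecord:
    """Run a job that returns its row count, logging timing, outcome, and errors."""
    record = AuditRecord(job_name)
    start = time.perf_counter()
    try:
        record.rows_copied = job()
        record.status = "Succeeded"
    except Exception as exc:  # record the failure instead of losing it
        record.status = "Failed"
        record.error_message = str(exc)
    finally:
        record.duration_s = time.perf_counter() - start
        audit_log.append(record)
    return record


if __name__ == "__main__":
    log: List[AuditRecord] = []

    def copy_orders() -> int:
        raise RuntimeError("Source table not found")

    run_with_audit("copy_customers", lambda: 120, log)
    run_with_audit("copy_orders", copy_orders, log)
    for rec in log:
        print(rec.job_name, rec.status, rec.rows_copied, rec.error_message)
```

This sketch swallows the exception so that every run leaves an audit entry; a production job would usually re-raise after logging so the failure still surfaces to the scheduler.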

Alerts play a vital role in monitoring ETL jobs. Manual monitoring, however, is inefficient and not recommended; automating the alert process is necessary. XTIVIA recommends using Azure Logic Apps to send custom email alerts for any failure in ETL jobs.

The link below provides more information on Azure Logic Apps.

https://learn.microsoft.com/en-us/azure/logic-apps/logic-apps-overview
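One common way to wire up such alerts is to give the Logic App a "When a HTTP request is received" trigger and have the ADF pipeline call it from a Web activity on the failure path, posting a small JSON body that the Logic App turns into an email. The Python sketch below only builds such a POST request and does not send it; the trigger URL and the payload field names (`pipelineName`, `runId`, `errorMessage`, `emailTo`) are hypothetical. In ADF the values would typically come from expressions such as `@pipeline().Pipeline` and `@pipeline().RunId`.

```python
import json
import urllib.request

# Hypothetical placeholder: the real URL is generated by the Logic App's
# "When a HTTP request is received" trigger after the workflow is saved.
LOGIC_APP_URL = "https://example.invalid/logic-app-trigger"


def build_failure_alert(pipeline: str, run_id: str, error: str,
                        recipients: str) -> urllib.request.Request:
    """Build (but do not send) the POST request an alert step would make."""
    payload = {
        "pipelineName": pipeline,
        "runId": run_id,
        "errorMessage": error,
        "emailTo": recipients,
    }
    return urllib.request.Request(
        LOGIC_APP_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_failure_alert("PL_LoadSales", "hypothetical-run-id",
                              "Copy activity timed out", "data-team@example.com")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # Sending would be urllib.request.urlopen(req); it is not executed here.
```

Keeping the email template inside the Logic App means the message format can change without redeploying any pipeline.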

Please contact us if you have any questions about this blog or need help with Azure Data Engineering.