Introduction

Amazon SageMaker Clarify is a tool for analyzing the behavior of machine learning models. It is typically used to explain model predictions, and it is especially useful for understanding black-box models and ensemble models. It runs on the AWS cloud, integrates with other SageMaker tools, and can be invoked from SageMaker notebooks.

AI models are now used for critical tasks such as buying and selling stocks in real time. Studying how a model behaves builds confidence in it, so critical and real-time applications should be analyzed before deployment. Model behavior is also gaining importance because clients increasingly ask how a model actually works. SageMaker Clarify addresses both concerns: it helps detect model bias and explain model behavior.

This article covers different types of bias, charts for understanding model behavior, variable importance charts, and code snippets that show how to configure and invoke SageMaker Clarify. As a running example, a simple machine learning model that predicts the salary range of employees is studied with SageMaker Clarify.

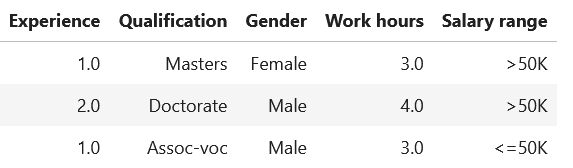

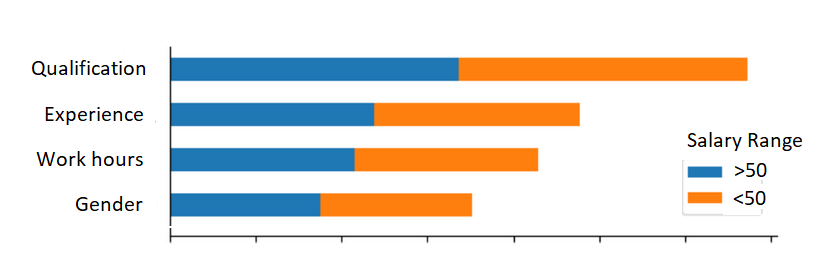

The input data has five variables:

- Experience

- Qualification

- Gender

- Work hours

- Salary range

We will evaluate how the independent variables influence the salary range.

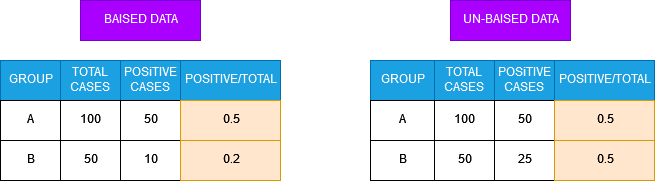

Data Bias

The first step in creating an unbiased model is creating unbiased data. If the data contains more positive cases for a particular group (for example, a race or gender), the algorithm learns to associate that group with the positive outcome. The developer needs to ensure that the ratio of positive cases to total cases is the same across all groups.
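The ratio check described above can be sketched in plain Python. This is a minimal illustration, not Clarify's implementation: the records are made up, label 1 is assumed to mark the positive outcome, and the two helper metrics follow the usual definitions of class imbalance (difference in group sizes) and difference in proportions of labels (difference in positive rates).

```python
from collections import defaultdict

# Hypothetical records: (gender, label). Label 1 is assumed to mark the
# positive outcome, for example the higher salary range.
records = [
    ("F", 1), ("F", 0), ("F", 0), ("F", 0),
    ("M", 1), ("M", 1), ("M", 1), ("M", 0), ("M", 0), ("M", 0),
]

def positive_rates(rows):
    """Return {group: positive cases / total cases}."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, label in rows:
        totals[group] += 1
        positives[group] += label
    return {group: positives[group] / totals[group] for group in totals}

def class_imbalance(rows, group_a, group_d):
    """CI = (n_a - n_d) / (n_a + n_d), based on group sizes only."""
    n_a = sum(1 for group, _ in rows if group == group_a)
    n_d = sum(1 for group, _ in rows if group == group_d)
    return (n_a - n_d) / (n_a + n_d)

def label_proportion_difference(rows, group_a, group_d):
    """DPL = q_a - q_d, the gap between the two groups' positive rates."""
    rates = positive_rates(rows)
    return rates[group_a] - rates[group_d]

rates = positive_rates(records)
ci = class_imbalance(records, "M", "F")
dpl = label_proportion_difference(records, "M", "F")
print("positive rate per group:", rates)
print("class imbalance:", ci)
print("difference in proportions of labels:", dpl)
```

Both metrics are zero when the groups are equally represented and have the same share of positive cases. Values far from zero suggest the data should be rebalanced before training.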

Model Bias

Some AI algorithms have been observed to show bias against certain groups. For example, an AI model for approving loans may reject applications from people of a particular gender. Bias in computer programs is not a new phenomenon: in 1988, the UK Commission for Racial Equality found that a computer program used to select medical students was biased against women and applicants with non-European names.

Governments across the world are creating laws and regulations to prevent bias in AI models. In the US, the Federal Trade Commission has warned that using biased AI models, for example in finance and recruitment, can violate existing consumer protection and anti-discrimination laws. Developers therefore need to demonstrate that a model is fair before publishing it, which also helps protect the owners and developers of the model against lawsuits.

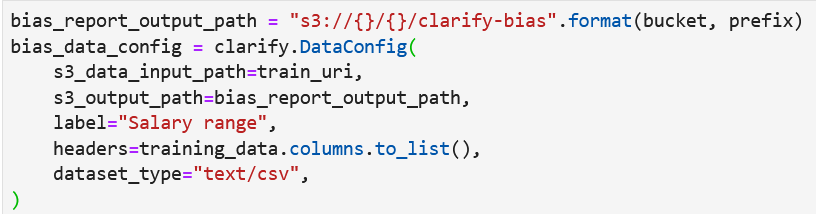

Configure the Clarify tool with arguments.

Specify the S3 bucket locations of input data and output folder.

Add the target variable with a label argument.

Specify the variable (called a facet in Clarify) to test for bias, for example Gender.

Clarify creates graphs at the output location.
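As a rough text version of these steps, the sketch below uses the `sagemaker.clarify` module of the SageMaker Python SDK. Several values are assumptions made for illustration and are not taken from the article: the bucket and prefix names, the `train.csv` file name, the column order, the encoding of the positive label as `1` and of the tested gender value as `0`, the instance type, and the IAM role. The job itself only runs inside an AWS environment with the SDK installed, so the launch is wrapped in a function that is not called here.

```python
def build_clarify_kwargs(bucket, prefix):
    """Collect the arguments from the steps above as plain dictionaries."""
    data_kwargs = {
        # S3 locations of the input data and the output folder (hypothetical paths).
        "s3_data_input_path": f"s3://{bucket}/{prefix}/train.csv",
        "s3_output_path": f"s3://{bucket}/{prefix}/clarify-output",
        # Target variable, passed through the label argument.
        "label": "Salary range",
        "headers": ["Experience", "Qualification", "Gender", "Work hours", "Salary range"],
        "dataset_type": "text/csv",
    }
    bias_kwargs = {
        # Assumed encoding: label 1 is the positive outcome.
        "label_values_or_threshold": [1],
        # Variable (facet) on which bias is tested.
        "facet_name": "Gender",
        # Assumed encoding: 0 is the group checked for disadvantage.
        "facet_values_or_threshold": [0],
    }
    return data_kwargs, bias_kwargs


def run_pre_training_bias(role_arn, data_kwargs, bias_kwargs):
    """Launch the Clarify job. Requires the sagemaker SDK and AWS credentials."""
    from sagemaker import Session, clarify

    processor = clarify.SageMakerClarifyProcessor(
        role=role_arn,
        instance_count=1,
        instance_type="ml.m5.xlarge",  # assumed instance type
        sagemaker_session=Session(),
    )
    processor.run_pre_training_bias(
        data_config=clarify.DataConfig(**data_kwargs),
        data_bias_config=clarify.BiasConfig(**bias_kwargs),
        methods="all",
    )


# Hypothetical bucket and prefix; replace with your own.
data_kwargs, bias_kwargs = build_clarify_kwargs("my-bucket", "salary")
print(data_kwargs["s3_output_path"])
```

Calling `run_pre_training_bias(role_arn, data_kwargs, bias_kwargs)` from a SageMaker notebook, with a role that can read and write the bucket, should start the analysis and write the report under the output path.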

Model Explainability

Machine learning professionals often want to know why a model made a particular prediction. This is especially true when the prediction is wrong and the developer wants to find the reason for the failure. Knowing the root cause helps in making the necessary changes to the input features.

Typically the model behavior is categorized into two types:

- Global Behavior: How the input variables contribute to the model's predictions overall.

- Local Behavior: How the input variables contribute to the prediction for a single input record or a small range of inputs.

Global Behavior Explainability

The explainability report lists the variables of the machine learning model in descending order of importance. Clarify internally uses the Kernel SHAP method to calculate feature importance.
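To make the idea behind these importance scores concrete, the sketch below computes exact Shapley values by enumerating every feature subset, then averages their absolute values across records to get a global ranking. This is not Kernel SHAP itself, which approximates the same quantities by sampling subsets and fitting a weighted regression. The linear scoring model, the records, and the baseline are all hypothetical stand-ins for the salary example.

```python
from itertools import combinations
from math import factorial

FEATURES = ["Experience", "Qualification", "Gender", "Work hours"]

def model(x):
    """Hypothetical scoring model standing in for the trained model."""
    experience, qualification, gender, work_hours = x
    return 2.0 * experience + 1.5 * qualification + 0.0 * gender + 0.1 * work_hours

def shapley_values(predict, x, baseline):
    """Exact Shapley values; features outside a subset take baseline values."""
    n = len(x)

    def value(subset):
        mixed = [x[i] if i in subset else baseline[i] for i in range(n)]
        return predict(mixed)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for subsets of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                members = set(subset)
                total += weight * (value(members | {i}) - value(members))
        phi.append(total)
    return phi

# Hypothetical records and baseline (a "typical" employee).
records = [[5, 2, 1, 40], [1, 1, 0, 45], [10, 3, 0, 38]]
baseline = [4, 2, 0, 40]

# Local explanations: one list of attributions per record.
local = [shapley_values(model, x, baseline) for x in records]

# Global importance: mean absolute attribution per feature.
global_importance = {
    name: sum(abs(phi[i]) for phi in local) / len(local)
    for i, name in enumerate(FEATURES)
}
ranking = sorted(global_importance.items(), key=lambda kv: kv[1], reverse=True)
for name, score in ranking:
    print(f"{name}: {score:.3f}")
```

For each record, the attributions add up to the difference between the model's output for that record and its output for the baseline. Exact enumeration is only practical for a handful of features, which is why sampling-based methods such as Kernel SHAP are used in practice.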

Local Behavior Explainability

We can also get the model behavior and reports for a single input record, which helps when debugging machine learning models. The local explanations are stored at the output path, and the explanation for a particular record can be retrieved by filtering for that record.
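Filtering for one record might look like the sketch below. It assumes the local explanations are available as a CSV with one row per input record and one attribution column per feature. The inline CSV text and its values are made up for illustration, and the actual file name and column layout in your output folder should be checked before adapting this.

```python
import csv
import io

# Hypothetical stand-in for a local explanations file: one row per input
# record, one attribution value per feature.
LOCAL_EXPLANATIONS_CSV = """Experience,Qualification,Gender,Work hours
2.0,0.0,0.0,0.0
-6.0,-1.5,0.0,0.5
12.0,1.5,0.0,-0.2
"""

def explain_record(csv_text, row_index):
    """Return one record's attributions, largest absolute value first."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    row = rows[row_index]
    contributions = {name: float(value) for name, value in row.items()}
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)

# Explain the second record (index 1).
top = explain_record(LOCAL_EXPLANATIONS_CSV, 1)
for name, value in top:
    print(f"{name}: {value:+.2f}")
```

A negative value means the feature pushed the prediction for that record down relative to the baseline, and a positive value means it pushed the prediction up.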
